AP+ Testing Portal

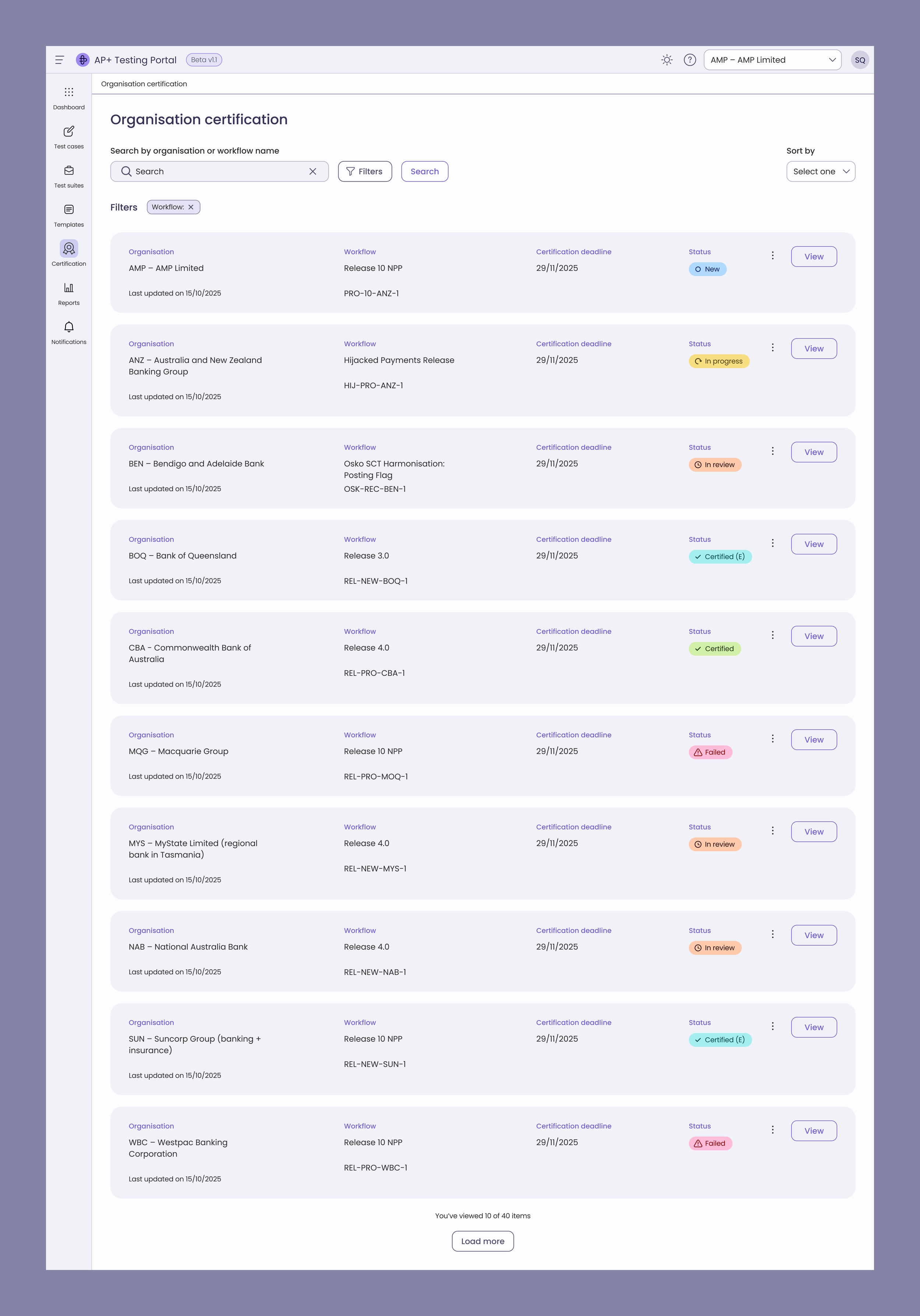

The AP+ Testing Portal at Australian Payments Plus is a desktop-first web application built to replace manual, spreadsheet-heavy certification workflows.

It enables banks, fintechs, and merchants to self-serve certification against AP+ payment products, while giving AP+ admins structured tools for workflow templates, attestations, exceptions, and reporting.

By digitising certification, the portal reduced manual effort, improved transparency, and accelerated member onboarding across the AP+ ecosystem.

Problem

Before the Testing Portal, certification relied on Excel spreadsheets and back-and-forth communication.

Participants: faced slow, drawn-out certification processes.

AP+ Testers: struggled to track attestations, exceptions, and progress manually.

AP+ overall: suffered from integration delays and higher operational costs.

The challenge

To build a scalable, self-service web application that could handle complex certification workflows while meeting compliance standards (e.g., those set by the Reserve Bank of Australia).

To set up a design system and rework the Phase 1 designs. The design system would enable a smooth design-to-code handoff using the Figma MCP server and AI.

Delivery

Contracted to deliver Phase 2 of the Testing Portal, I helped deliver two key pillars:

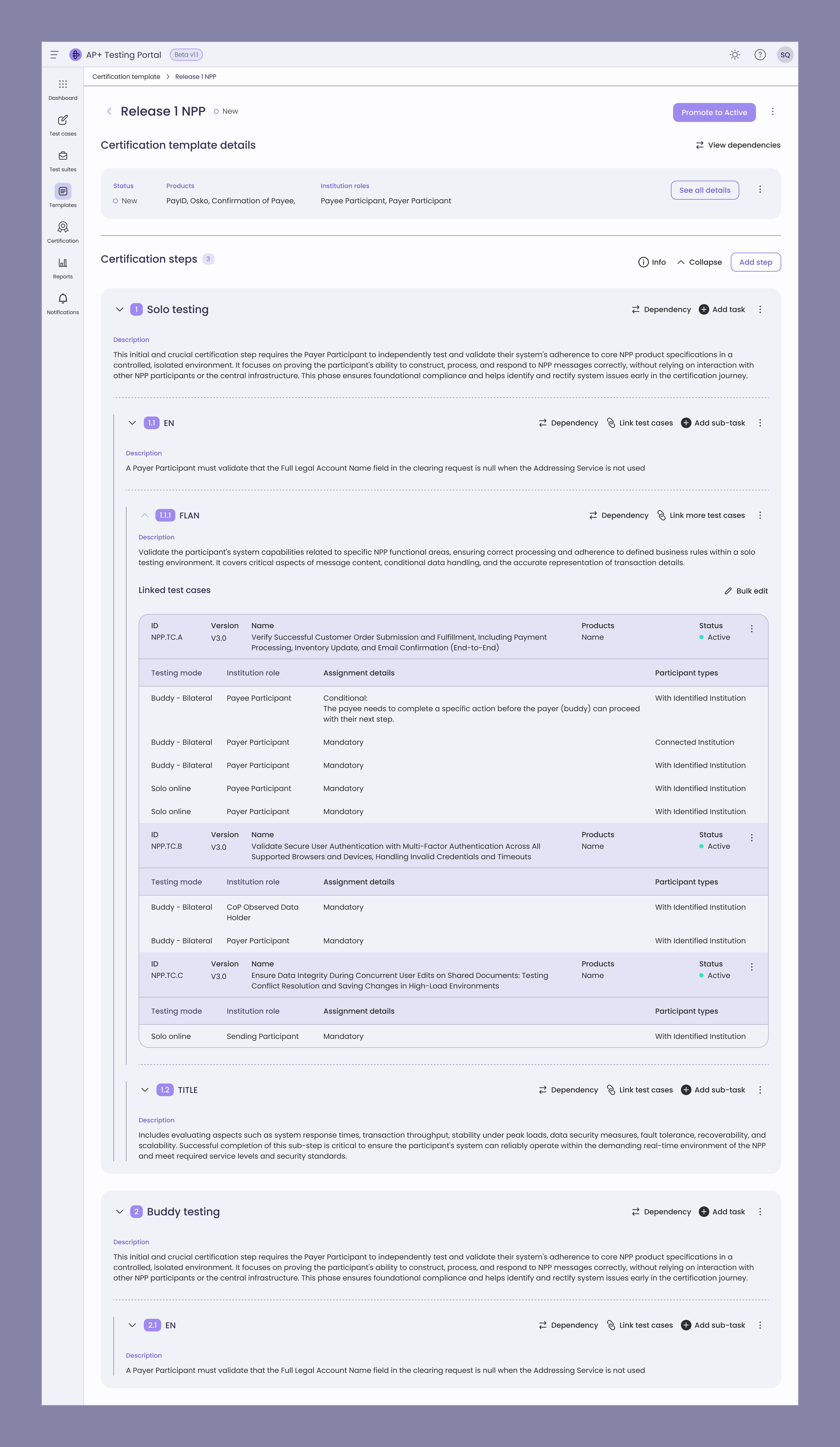

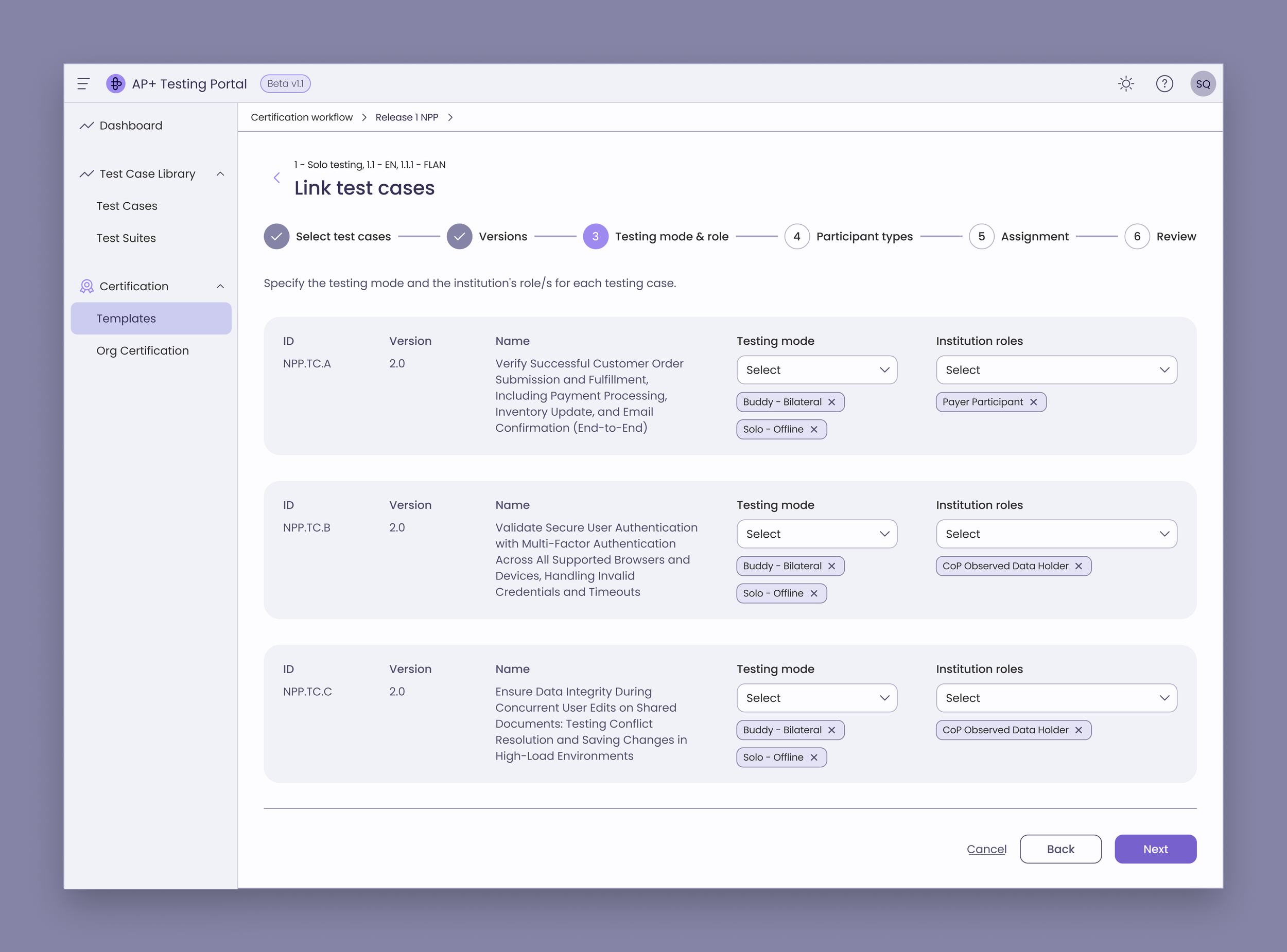

Certification Workflow Template Management

AP+ Testers can create, edit, and manage templates with steps, tasks, test case linkages, dependencies, and reports.

Member Certification

Participants can view assigned workflows, complete attestations, raise exceptions, and track progress.

Built secure access hierarchies for managing permissions and documentation.

Approach to discovery

I joined the team at Phase 2, which covered certification and organisation workflows. Phase 1, Test Case and Test Suite Management, had already been completed.

There was no budget for dedicated discovery or user research, so I worked within that scope using the following approach.

User Journey Mapping

User feedback

Landscape review (study similar products and workshop the best ideas with stakeholders)

Scope

Stakeholder management

UX facilitation

Cross-collaboration

Content strategy

Information architecture

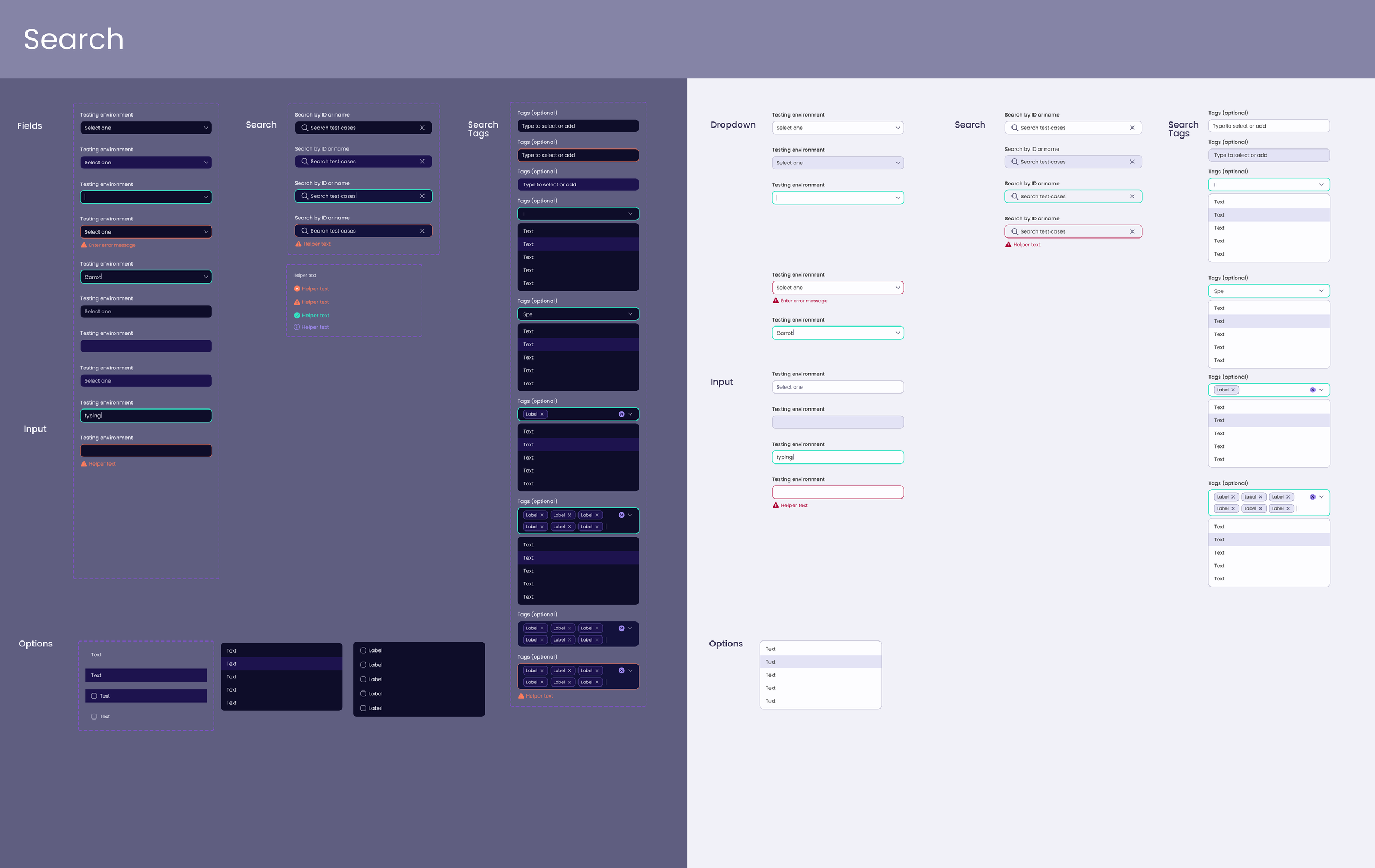

User interface and detailing

Figma-to-code workflow

User Journey

Facilitated stakeholder workshops to align on goals, constraints, and user needs for Phase 2 of the project.

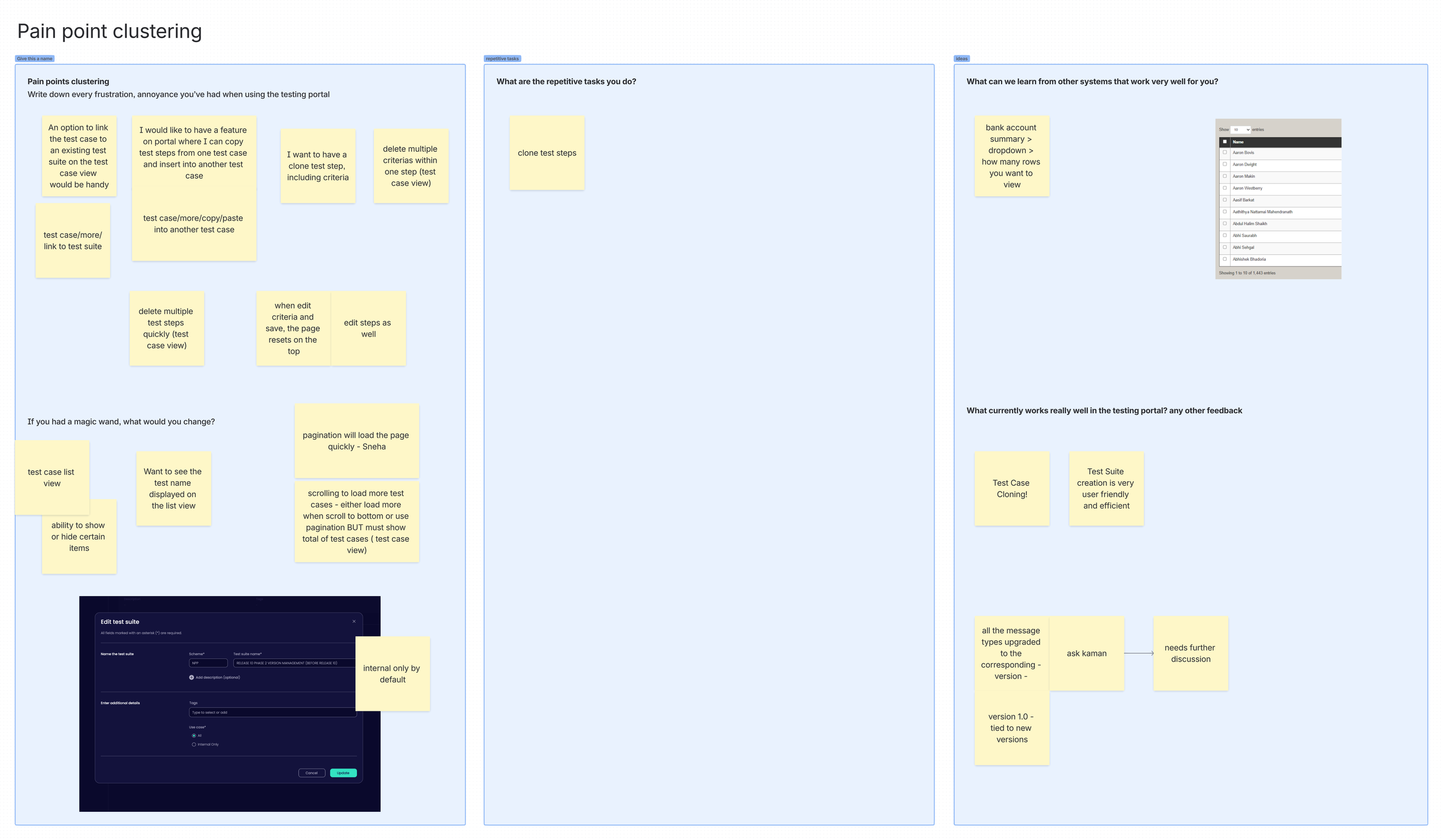

User Feedback

As part of Phase 2, I ran feedback workshops with internal testers to understand how they used Phase 1 features (Test Case and Test Suite Management). The goal was to identify real-world frustrations and repetitive tasks that would inform the more complex Certification Workflow Management design.

Key insights included:

Bulk action gaps: need for faster clone, copy/paste, and multi-delete.

Navigation issues: the page resetting after edits and difficulty scrolling large lists.

Visibility needs: showing test names in list view and toggling field visibility.

Performance expectations: smoother pagination and load times for large datasets.

Validation clarity: clearer distinction between blocking errors and warnings.

These insights allowed Phase 2 designs to build on proven strengths while addressing usability gaps, creating a smoother transition from test case management to certification workflows.

Information Architecture

Based on these findings, I mapped out how content and functions are organised before deciding how they look. This kept the design driven by logic rather than visuals, and ensures new features can fit into the system without breaking the hierarchy.

Identifies navigation gaps and redundancies early by gathering feedback from the cross-functional team, especially engineers.

Gives engineers a clear structural map of the product, so design and development decisions stem from the same logic.

Information architecture of the organisation workflow

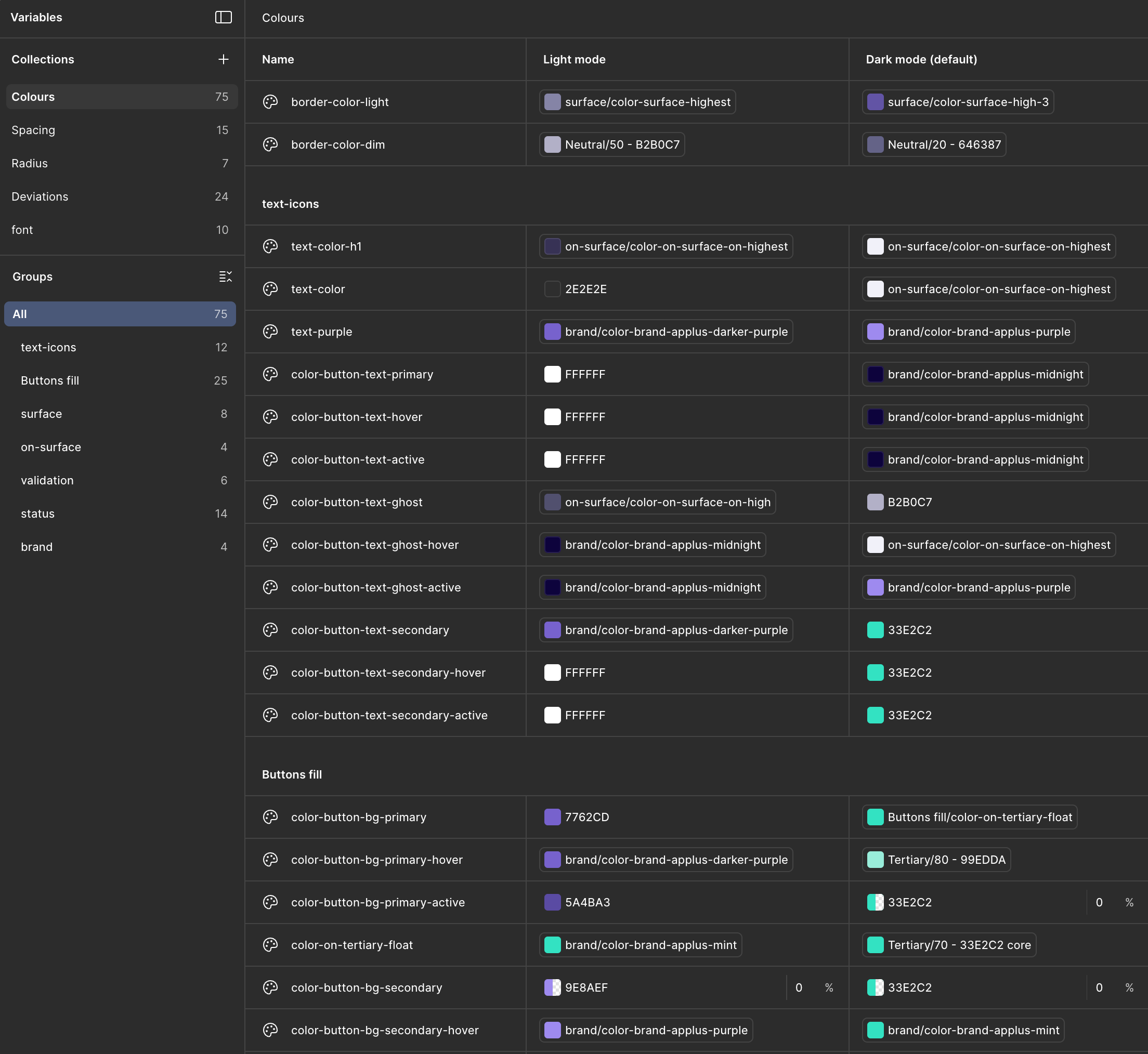

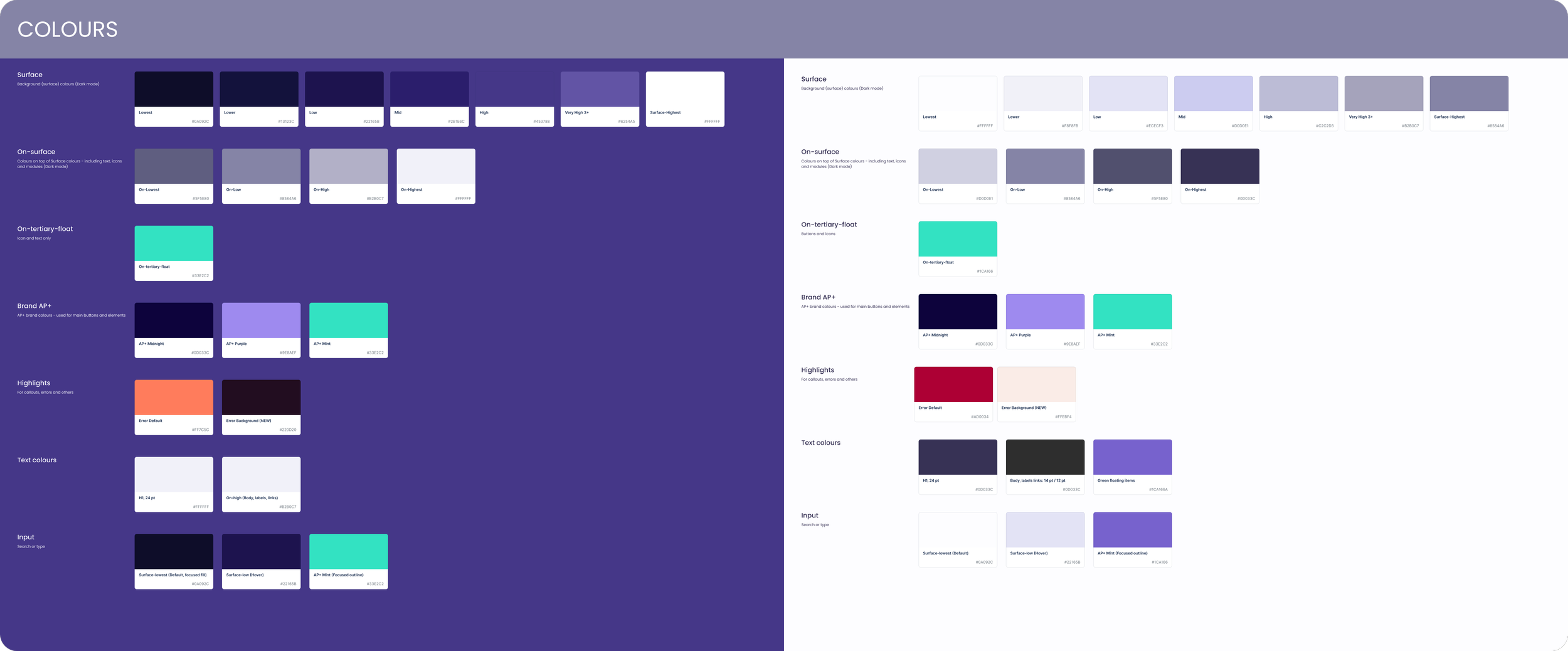

Design System and design to code

Key contributions:

Together with the Frontend Engineer, experimented with, refined, and improved a Figma-to-code process using the Figma MCP server (learn more on my Substack).

Standardised tokens and design system best practices, and referenced component and UI patterns from React Aria to enable a smooth handover.

Collaborated with Frontend Engineer to establish design token naming conventions between Figma tokens and CSS custom properties, making AI-assisted development more reliable and reducing technical debt.

Structured Figma files using atomic design principles, auto layout, consistent layer naming, and annotations to enable reliable translation of components into code.

Worked with the Frontend Engineer to develop instructional files and prompt libraries that encoded design system principles for AI code generation.

The instructional files cover topics such as responsive patterns, accessibility requirements, and component hierarchy.
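The token naming convention can be illustrated with a small sketch. It assumes a hypothetical nested token export from Figma in which each dot-separated path (e.g. `color.text.primary`) maps one-to-one onto a kebab-case CSS custom property (`--color-text-primary`); the function names and token values below are illustrative, not the portal's actual scheme.

```typescript
// Hypothetical token shape: a nested object exported from Figma.
// Leaf values are raw CSS values; the nesting path defines the name.
type TokenTree = { [key: string]: string | TokenTree };

// Convert a token path (["color", "text", "primary"]) into a CSS custom
// property name ("--color-text-primary"). camelCase segments are split,
// so "bgSubtle" becomes "bg-subtle".
function toCssVarName(path: string[]): string {
  const kebab = path.map((segment) =>
    segment.replace(/([a-z0-9])([A-Z])/g, "$1-$2").toLowerCase()
  );
  return `--${kebab.join("-")}`;
}

// Walk the tree and emit a flat map of CSS variable name -> value.
function flattenTokens(tree: TokenTree, prefix: string[] = []): Map<string, string> {
  const out = new Map<string, string>();
  for (const [key, value] of Object.entries(tree)) {
    const path = [...prefix, key];
    if (typeof value === "string") {
      out.set(toCssVarName(path), value);
    } else {
      for (const [name, v] of flattenTokens(value, path)) out.set(name, v);
    }
  }
  return out;
}

// Render the flat map as a :root block that components can reference,
// e.g. color: var(--color-text-primary).
function toCss(tokens: Map<string, string>): string {
  const lines = [...tokens].map(([name, value]) => `  ${name}: ${value};`);
  return `:root {\n${lines.join("\n")}\n}`;
}

// Example usage with illustrative (not real AP+) token values.
const tokens: TokenTree = {
  color: {
    text: { primary: "#1a1a1a", bgSubtle: "#f5f5f5" },
  },
  space: { md: "16px" },
};

const cssVars = flattenTokens(tokens);
console.log(toCss(cssVars));
```

The value of a convention like this is that the Figma name and the CSS name are mechanically derivable from each other, so an AI assistant reading a Figma token can predict the variable name without a lookup table.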

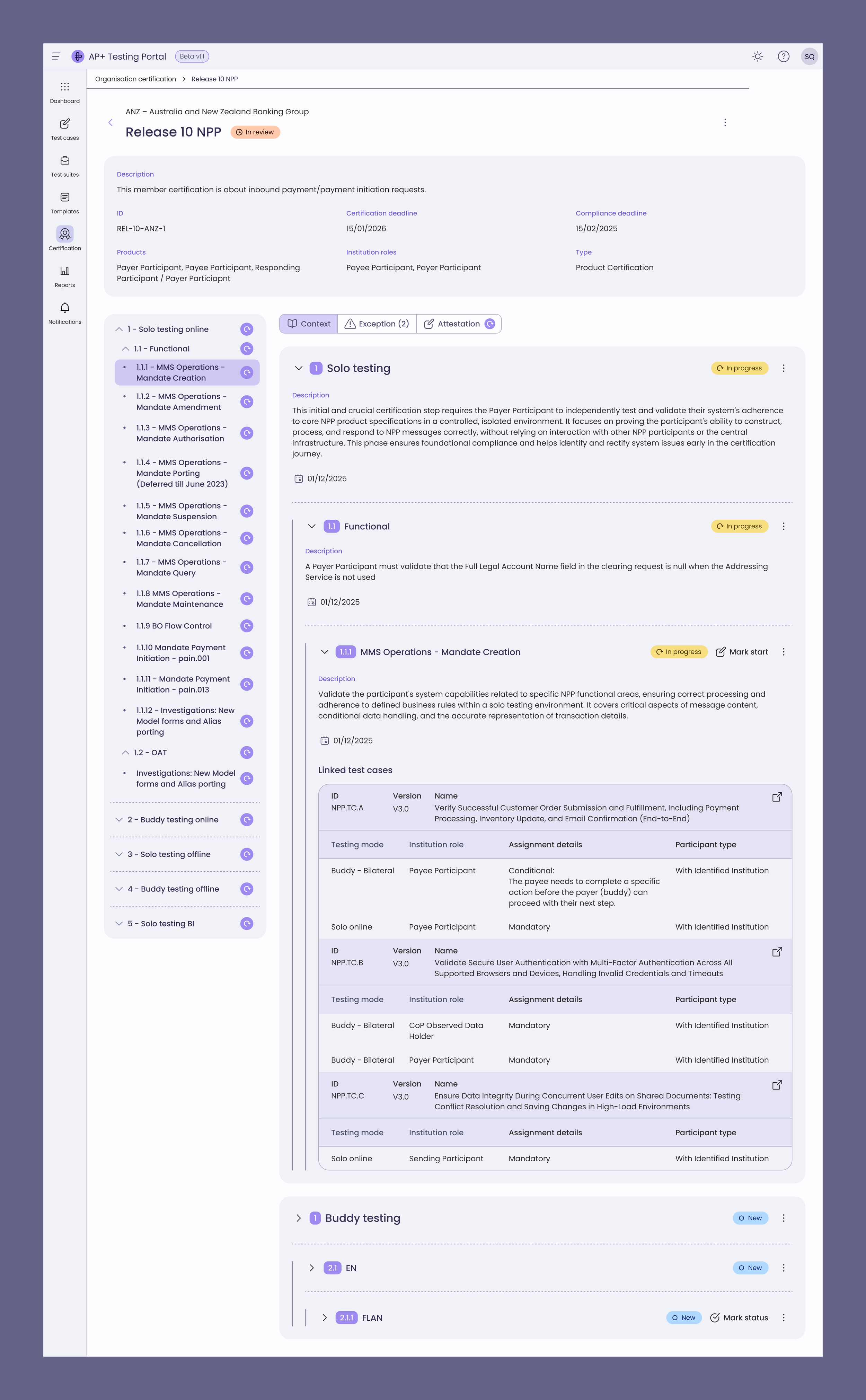

Member Certification

User Problems

No single view of attested milestones, uploaded evidence, or exceptions.

Inconsistent review actions and comment tracking across reviewers.

Limited visibility on member progress and feedback history.

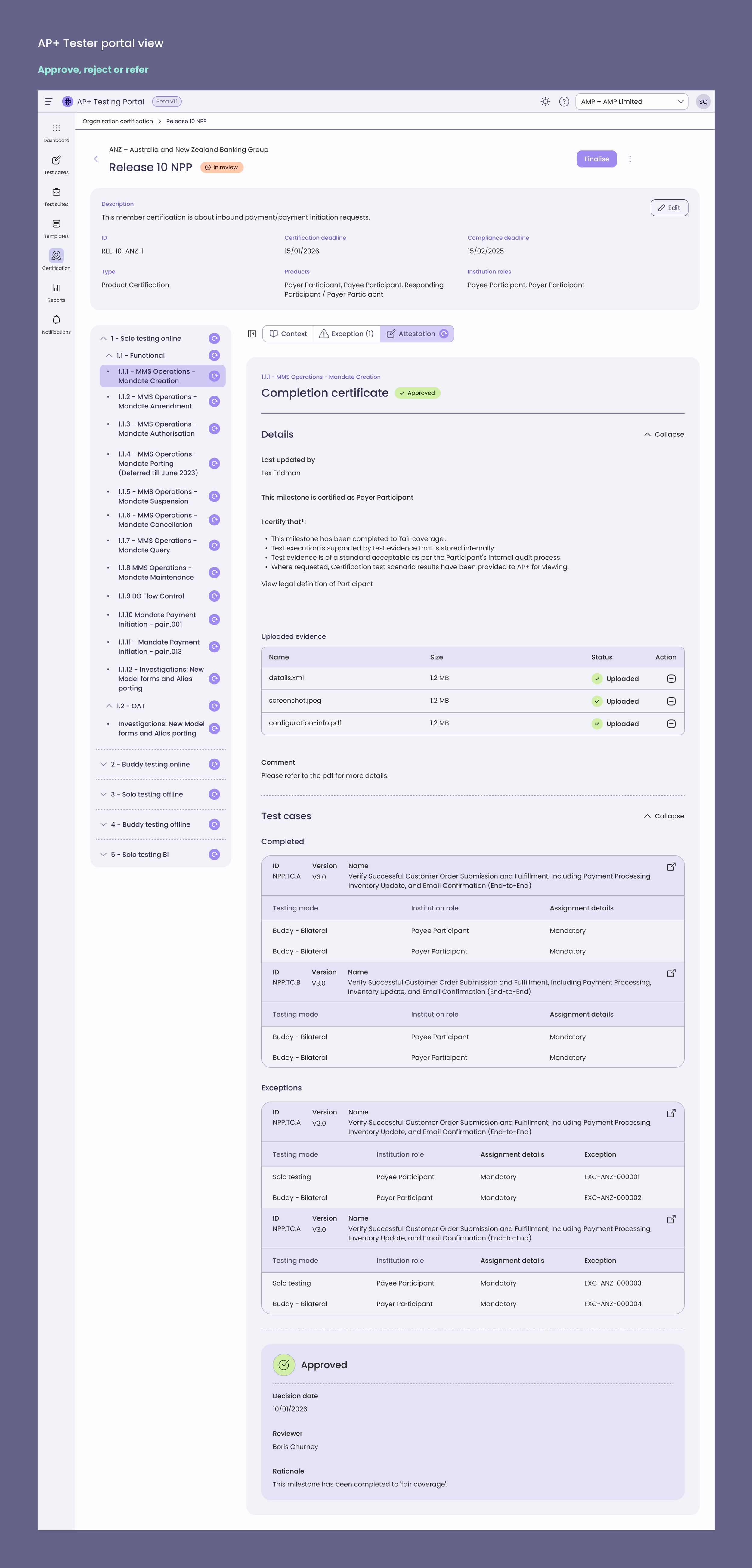

Solutions

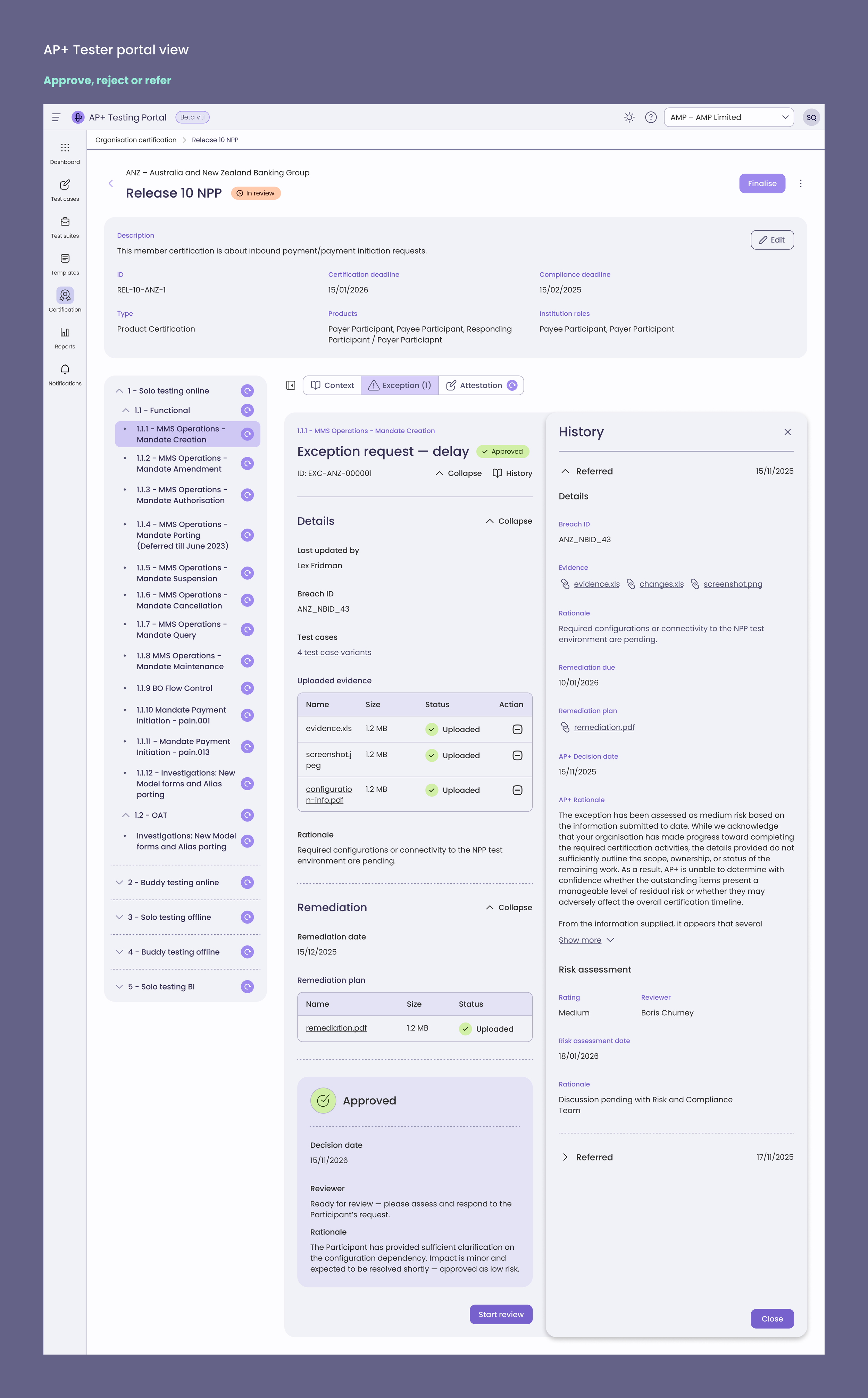

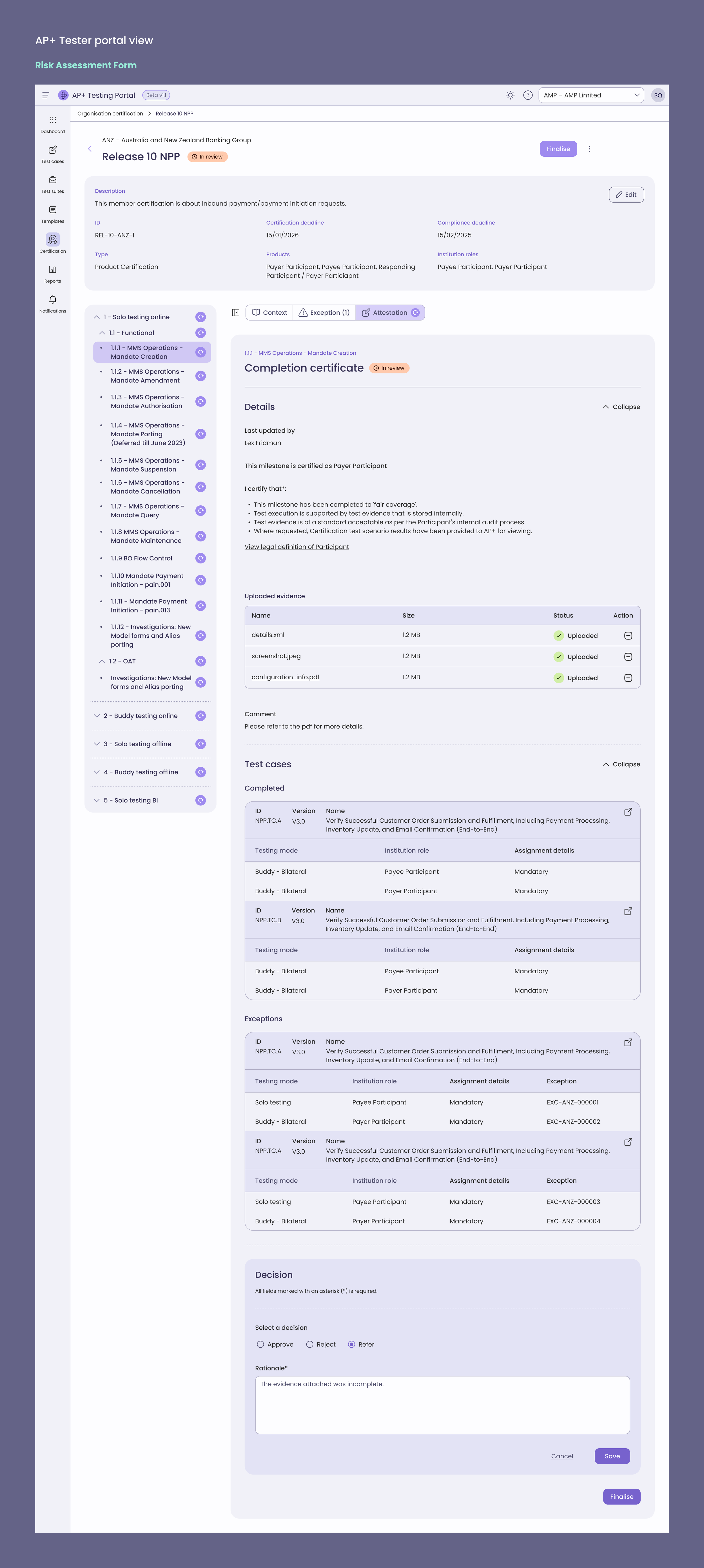

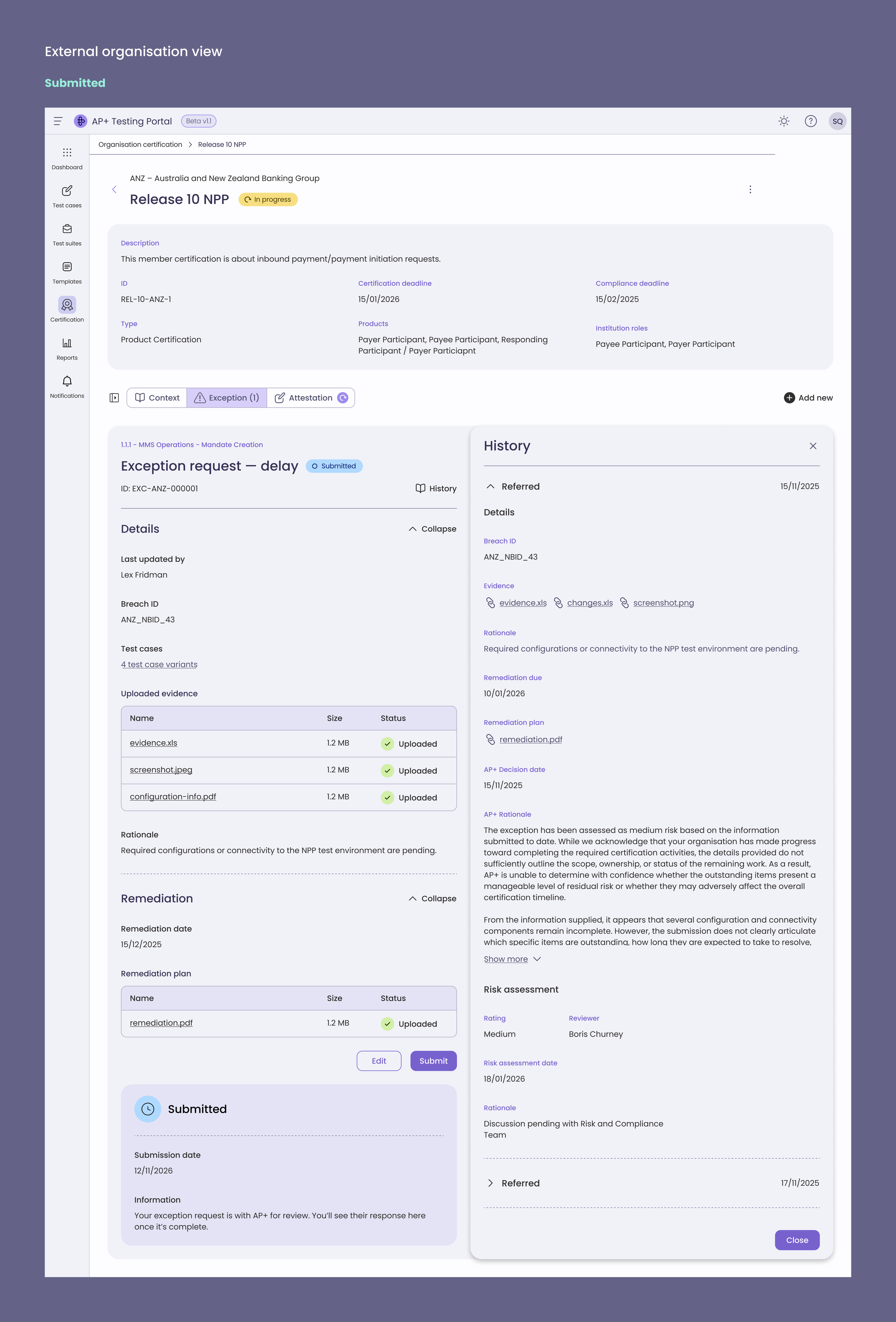

Built a centralised review interface showing context, attested Test Cases, Exceptions, and Evidence in one view.

Added inline decision controls – Approve, Reject, Refer Back – with mandatory comments for traceability.

Introduced a refer-back workflow allowing members to revise submissions without losing version history.
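The review controls and refer-back workflow can be sketched as a small state model. This is a hypothetical TypeScript illustration, not the portal's implementation: the `Submission` and `ReviewRecord` types, the status names, and the rule that a revision is appended as a new version are assumptions made to show how mandatory comments and version history fit together.

```typescript
// Hypothetical decision and status names mirroring Approve / Reject / Refer Back.
type ReviewDecision = "approve" | "reject" | "referBack";
type SubmissionStatus = "submitted" | "approved" | "rejected" | "referredBack";

interface Version {
  number: number;
  evidence: string[]; // e.g. uploaded file names
}

interface ReviewRecord {
  decision: ReviewDecision;
  comment: string;
  reviewer: string;
  version: number; // which version the decision applied to
}

interface Submission {
  status: SubmissionStatus;
  versions: Version[]; // full history; never overwritten
  reviews: ReviewRecord[];
}

const statusFor: Record<ReviewDecision, SubmissionStatus> = {
  approve: "approved",
  reject: "rejected",
  referBack: "referredBack",
};

// Apply a reviewer decision. A non-empty comment is required for every
// decision so each outcome stays traceable.
function review(s: Submission, decision: ReviewDecision, comment: string, reviewer: string): Submission {
  if (s.status !== "submitted") {
    throw new Error(`Cannot review a submission in status "${s.status}"`);
  }
  if (comment.trim() === "") {
    throw new Error("A comment is required for every review decision");
  }
  const current = s.versions[s.versions.length - 1];
  return {
    ...s,
    status: statusFor[decision],
    reviews: [...s.reviews, { decision, comment, reviewer, version: current.number }],
  };
}

// A member revises a referred-back submission. The new evidence is appended
// as a new version, so earlier versions remain in the history.
function resubmit(s: Submission, evidence: string[]): Submission {
  if (s.status !== "referredBack") {
    throw new Error("Only referred-back submissions can be revised");
  }
  const next: Version = { number: s.versions.length + 1, evidence };
  return { ...s, status: "submitted", versions: [...s.versions, next] };
}

// Example: refer back, revise, then approve (names are illustrative).
let sub: Submission = {
  status: "submitted",
  versions: [{ number: 1, evidence: ["test-log-v1.pdf"] }],
  reviews: [],
};
sub = review(sub, "referBack", "Evidence missing for step 3", "tester-a");
sub = resubmit(sub, ["test-log-v2.pdf"]);
sub = review(sub, "approve", "All evidence provided", "tester-a");
console.log(sub.status, sub.versions.length, sub.reviews.length);
```

Recording the version number on each review ties every decision and comment to the exact evidence it was made against, which is what keeps the history traceable after a refer-back.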

UI Principles

Efficiency: Key actions surfaced inline to reduce navigation and review time.

Transparency: Shared visibility of comments, status, and evidence between both parties.

Consistency: Unified layout and states across attestation and review flows for a seamless experience.

Certification milestone

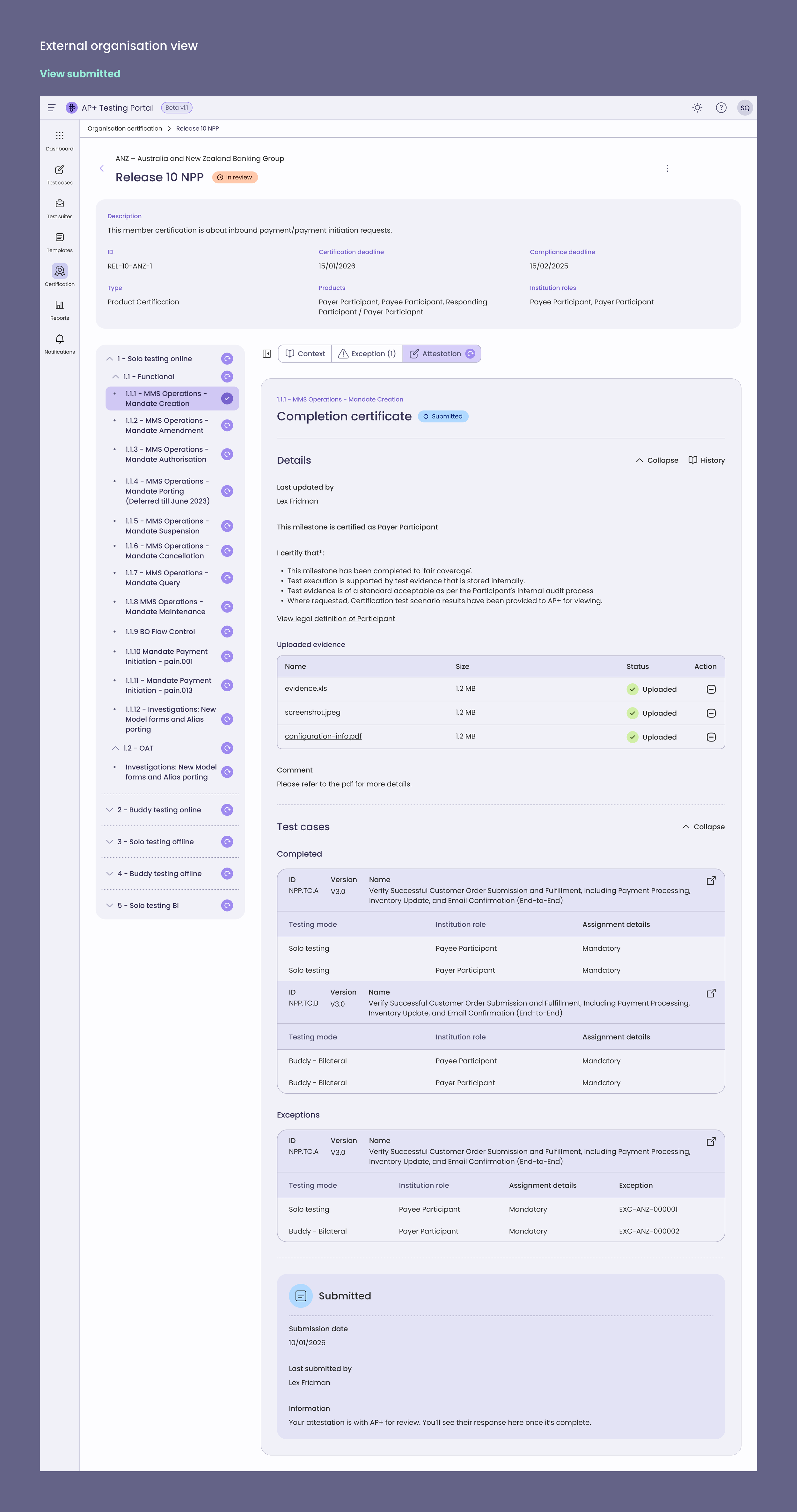

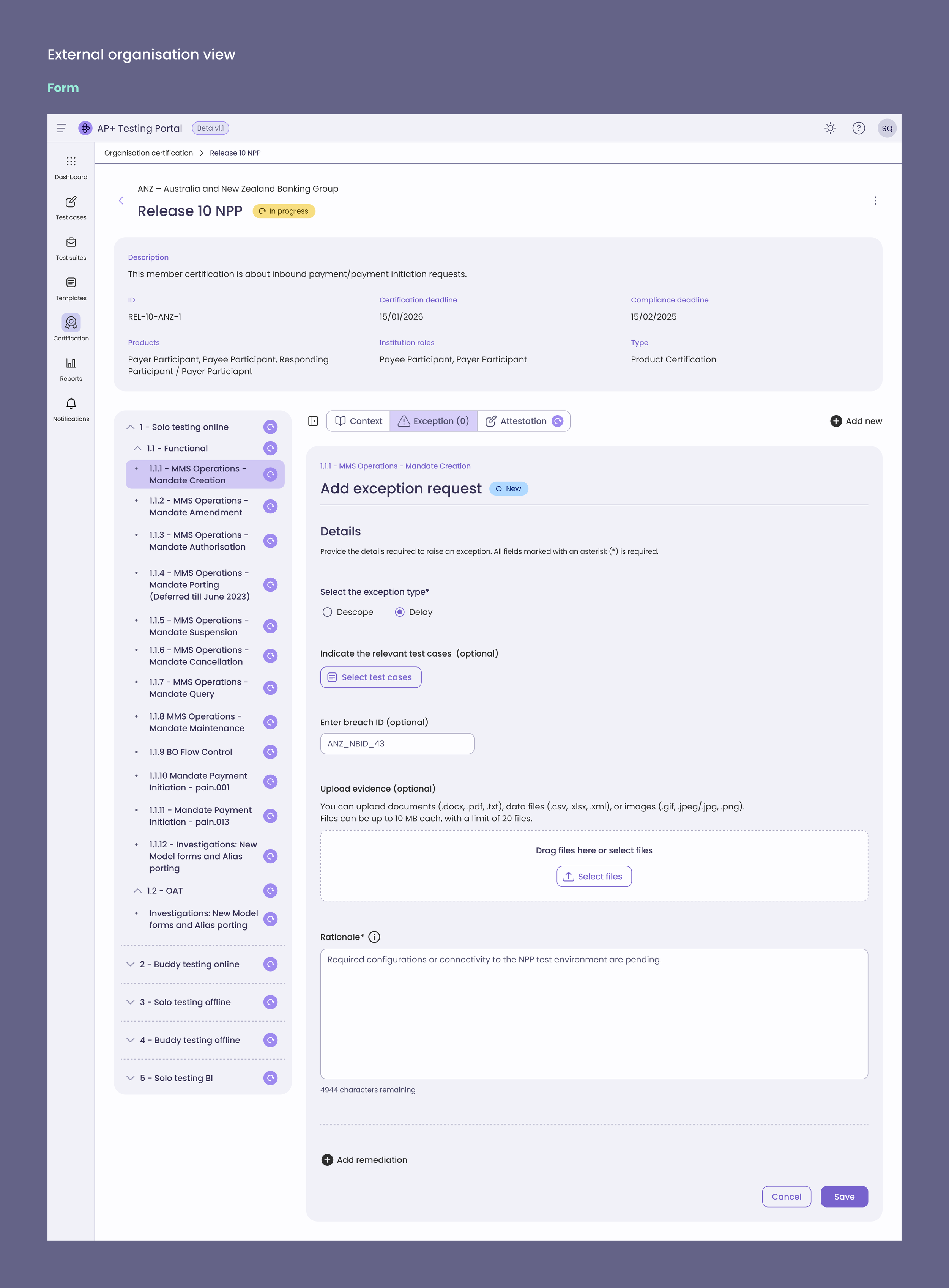

This context page provides external banks and merchants with a clear, structured flow for completing each certification milestone by a deadline set by our internal users.

It displays required steps, linked test cases, statuses, and dependencies in one place, guiding Participants through what to do next and enabling them to progress confidently with transparency and consistency.

I designed a structure for displaying steps, tasks, and sub-tasks, integrated with complex business logic, that scales across both external- and internal-facing views.
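One way to model steps, tasks, and sub-tasks with dependencies is a recursive tree, where progress rolls up from the leaves and a step is only actionable once its dependencies are complete. The sketch below is a hypothetical TypeScript illustration under those assumptions; the `WorkItem` shape, status values, roll-up rule, and example step names are not taken from the portal.

```typescript
type Status = "notStarted" | "inProgress" | "complete";

// A step, task, or sub-task. Only leaves carry their own status; parents
// derive theirs from their children, so the same shape works at any depth.
interface WorkItem {
  id: string;
  title: string;
  status?: Status; // set on leaves only
  children?: WorkItem[];
  dependsOn?: string[]; // ids of steps that must be complete first
}

// Count completed leaves vs total leaves beneath an item.
function leafProgress(item: WorkItem): { done: number; total: number } {
  if (!item.children || item.children.length === 0) {
    return { done: item.status === "complete" ? 1 : 0, total: 1 };
  }
  return item.children.reduce(
    (acc, child) => {
      const p = leafProgress(child);
      return { done: acc.done + p.done, total: acc.total + p.total };
    },
    { done: 0, total: 0 }
  );
}

function isComplete(item: WorkItem): boolean {
  const { done, total } = leafProgress(item);
  return done === total;
}

// Steps a participant can act on now: not yet complete, and every
// dependency is complete. Unknown dependency ids are treated as unmet.
function nextActionableSteps(steps: WorkItem[]): string[] {
  const byId = new Map(steps.map((s) => [s.id, s] as const));
  return steps
    .filter((s) => !isComplete(s))
    .filter((s) =>
      (s.dependsOn ?? []).every((dep) => {
        const d = byId.get(dep);
        return d !== undefined && isComplete(d);
      })
    )
    .map((s) => s.id);
}

// Illustrative milestone with three steps (hypothetical names).
const steps: WorkItem[] = [
  {
    id: "connectivity",
    title: "Connectivity",
    children: [
      { id: "c1", title: "Configure endpoint", status: "complete" },
      { id: "c2", title: "Handshake test", status: "complete" },
    ],
  },
  {
    id: "payments",
    title: "Payment scenarios",
    dependsOn: ["connectivity"],
    children: [
      {
        id: "p1",
        title: "Scenario group A",
        children: [
          { id: "p1a", title: "Happy path", status: "complete" },
          { id: "p1b", title: "Rejected payment", status: "notStarted" },
        ],
      },
    ],
  },
  { id: "reporting", title: "Reporting", dependsOn: ["payments"], status: "notStarted" },
];

console.log(leafProgress(steps[1])); // → { done: 1, total: 2 }
console.log(nextActionableSteps(steps)); // → [ 'payments' ]
```

Because parents never store their own status, adding another level of nesting needs no new logic, which is one way a structure like this can stay consistent across internal and external views.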

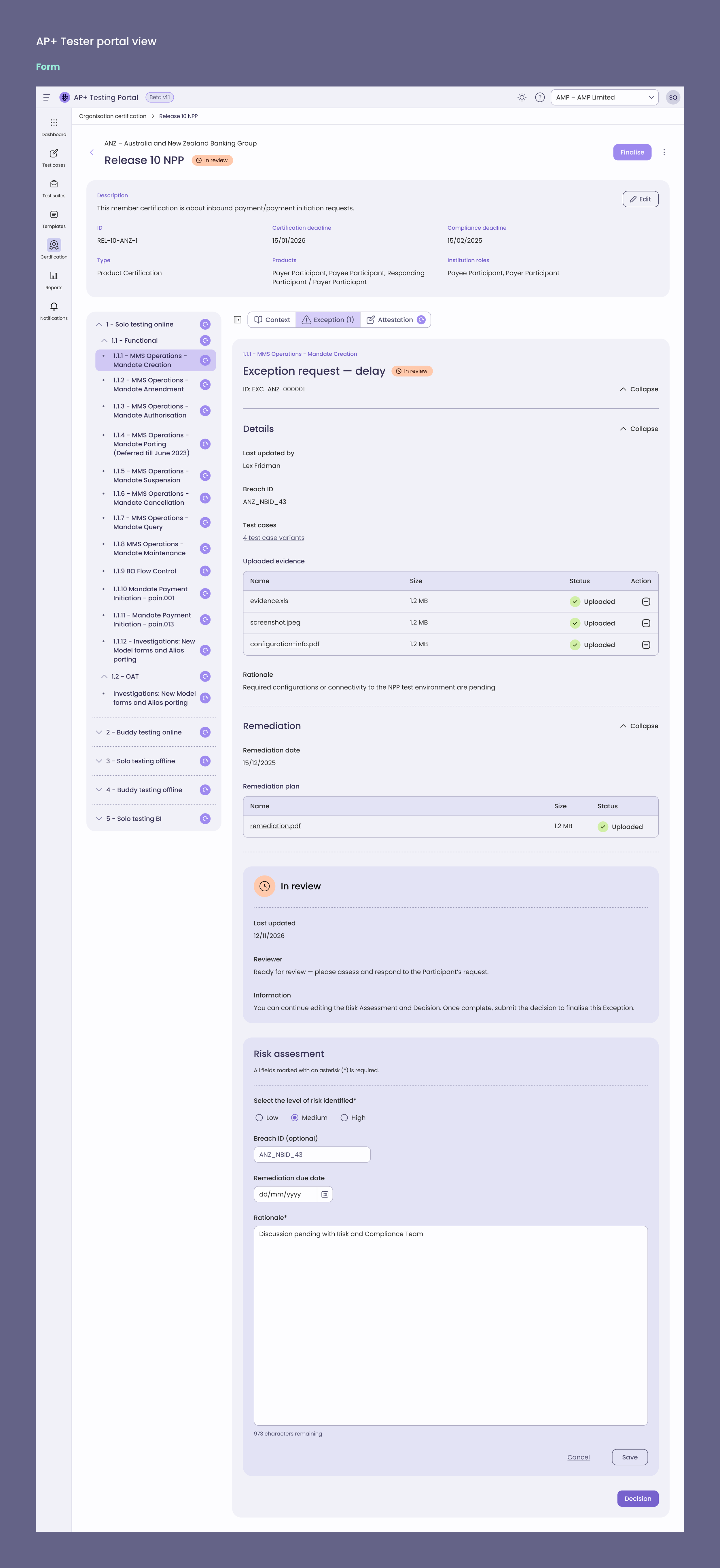

Exceptions

Banks or merchants raising exceptions for test steps they cannot complete

AP+ Testers approving the exception

Attestation

Banks or merchants attesting that test cases were completed to compliance standards

AP+ Testers approving the attestation

Impact

20–30% reduction in manual effort for internal testers by replacing spreadsheets with structured workflows.

Enabled self-service certification for banks, fintechs, and merchants, reducing onboarding friction.

Improved cross-functional collaboration by aligning design outcomes with team OKRs.

Designed end-to-end certification workflows supporting regulatory compliance.

Achievements

Refined and improved a Figma-to-code process using the Figma MCP server (learn more on my Substack).

Delivered production-ready Figma files, ensuring smooth handover to front-end engineers.

Demonstrated best practices and systems thinking to designers and frontend engineers to improve these workflows (Dev Mode, instructing AI models).

AI-enabled workflows:

OpenAI custom agent: a UX QA partner that produces reports from manual testing.

Playwright tests in the codebase to verify acceptance criteria.

AI-optimised specifications and a context-driven coding workflow.